1. Introduction to Big Data Solutions

Digital transformation of businesses has resulted in an explosion in the amount of data we generate, store, and analyze. This wide range of data, termed “big data,” encompasses social media posts, location data, online transaction records, IoT sensor data, machine logs, and more. Big data solutions are the techniques, technologies, and tools designed to manage, process, and extract value from this enormous volume of structured and unstructured data in support of business intelligence.

Big data solutions power decision-making, help drive innovation, and offer new ways to gain and maintain a competitive edge. Companies that can harness the potential of big data stand to gain deeper insights into their operations, customer demands, and market dynamics. These insights can lead to improved decision-making, increased operational efficiencies, and the creation of new products and services that are better tailored to satisfy customer needs.

2. Traditional versus Modern Big Data Approaches

In the early days of computing, data was stored in hierarchical databases such as IBM IMS. These databases were well suited to the relatively small amounts of structured data that businesses dealt with. Such databases could only be navigated using procedural logic, so they could not be used for decision support.

The next generation of databases, such as IDMS, was based on the network model. Network databases were also designed to support operational transactions but were easier to maintain than IMS. Both hierarchical and network databases had to be unloaded into flat files during the nightly batch run and then loaded into early decision support systems such as SAS to produce a very limited set of fixed management reports.

The next major shift was to relational database technology, whose normalized data model supported a far wider range of queries for decision making. Companies such as Informix and Oracle tried to extend the relational model to support unstructured data such as geolocations, text, images, and video, but their strength was, and still is, structured data.

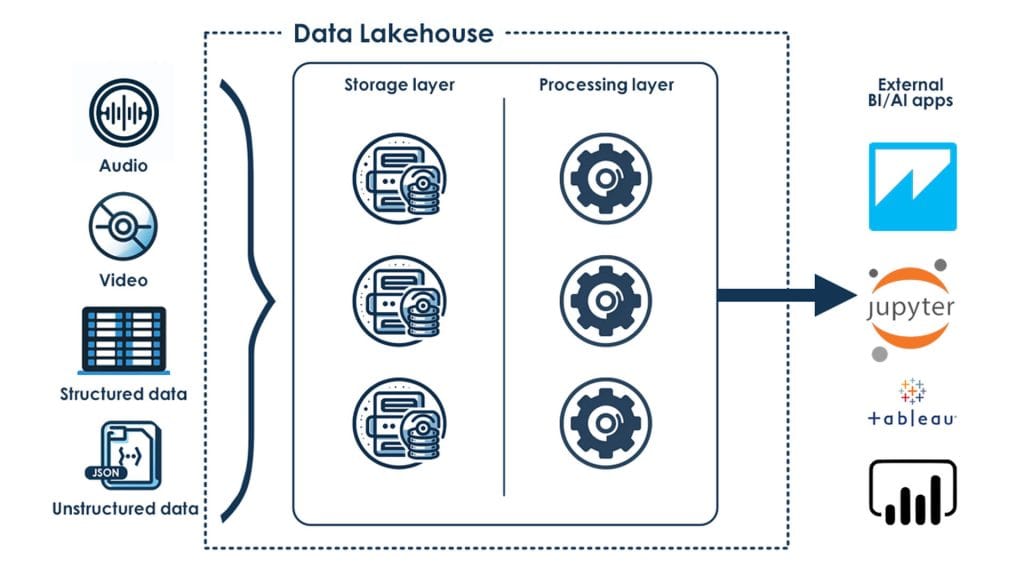

The need to store large volumes of high-velocity data in a great variety of modern formats gave rise to the Big Data movement, which used low-cost Intel-based servers connected by high-speed interconnects into Hadoop clusters. Apache Hadoop provided an open-source, scalable file system that could store both structured and unstructured data. As the cost of cloud storage fell, cloud data lakes displaced Hadoop clusters. Data warehouses still use the relational model, with extensions and metadata that let them access data held in data lakes. The two are often combined into virtual data lakehouses. Traditional nightly batch processing has evolved into real-time data processing that provides current data for decision-making.

Modern big data solutions offer several advantages over their traditional counterparts:

- Scalability: Modern solutions can handle petabytes of data with ease.

- Flexibility: They can manage both structured and unstructured data.

- Speed: Real-time data processing and analytics are now possible.

- Cost-Effectiveness: Open-source solutions like Hadoop and Spark have reduced the cost barriers to entry.

3. Types of Big Data Solutions

Big data solutions can be broadly categorized based on their primary function and the kind of data they handle. Here are the main types:

Data Lakes: These are storage repositories that can hold vast amounts of raw data in their native format until it’s needed. Data lakes can store structured, semi-structured, or unstructured data, making them versatile for various big data analytics needs.

Data Warehouses: Unlike data lakes, data warehouses store structured data. They are optimized for fast query performance and are used for business intelligence activities like data analysis and reporting. Examples include Actian Vector, Snowflake, and Redshift.
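The practical difference between the two is often described as schema-on-read versus schema-on-write. The minimal Python sketch below illustrates it with in-memory lists standing in for storage; the record shapes and the helper names `read_purchases`, `load_into_table`, and `PURCHASE_SCHEMA` are hypothetical and not drawn from any product.

```python
import json

# Data lake style (schema-on-read): keep raw records exactly as they arrived.
raw_zone = [
    '{"user": "a1", "event": "click", "ts": 1700000000}',
    '{"user": "b2", "event": "purchase", "amount": 19.99, "ts": 1700000050}',
    '{"sensor": "t-7", "temp_c": 21.5}',  # a completely different shape is accepted too
]

def read_purchases(raw_lines):
    """Apply a schema only at query time: parse each line, keep the records that fit."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        if record.get("event") == "purchase":
            rows.append((record["user"], float(record["amount"])))
    return rows

# Data warehouse style (schema-on-write): validate against a fixed schema before loading.
PURCHASE_SCHEMA = {"user": str, "amount": float}

def load_into_table(table, record):
    """Reject any record that does not match the schema; store only the schema's columns."""
    for column, column_type in PURCHASE_SCHEMA.items():
        if not isinstance(record.get(column), column_type):
            raise ValueError(f"column {column!r} is missing or not {column_type.__name__}")
    table.append({column: record[column] for column in PURCHASE_SCHEMA})

purchases_table = []
load_into_table(purchases_table, {"user": "b2", "amount": 19.99, "ts": 1700000050})
print(read_purchases(raw_zone))  # [('b2', 19.99)]
```

The lake accepts the sensor record even though no current query uses it, while the warehouse table rejects anything that does not match its schema at load time and keeps only the declared columns.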

NoSQL Databases: These databases are designed to store, retrieve, and manage non-relational data, such as documents, key-value pairs, and wide-column records. Examples include MongoDB, Cassandra, and Couchbase.

Stream Processing Platforms: Tools like Apache Kafka and Apache Flink are designed to handle real-time data streams, allowing businesses to process and analyze data as it’s generated.
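As a rough illustration of what stream processing involves, the sketch below counts events per key in fixed one-minute tumbling windows, one of the basic windowing patterns that engines such as Flink provide. It is plain Python over a finite list, not the Kafka or Flink API; a real engine also handles unbounded input, late or out-of-order events, and state recovery. The event data and the function name are hypothetical.

```python
from collections import Counter, defaultdict

def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping (tumbling) time windows.

    events: iterable of (timestamp_in_seconds, key) pairs, e.g. page views.
    Returns {window_start: Counter({key: count})}.
    """
    windows = defaultdict(Counter)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)  # align the event to its window's start
        windows[window_start][key] += 1
    return dict(windows)

# Hypothetical click stream: (seconds since start, page).
clicks = [(0, "home"), (12, "cart"), (59, "home"), (61, "home"), (75, "checkout")]
per_minute = tumbling_window_counts(clicks, window_seconds=60)
print(per_minute[0])   # Counter({'home': 2, 'cart': 1})
print(per_minute[60])  # Counter({'home': 1, 'checkout': 1})
```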

Machine Learning Platforms: These are solutions designed to create and manage machine learning models, which can predict future events based on historical data. Google’s TensorFlow and Microsoft’s Azure Machine Learning are popular choices.
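Production platforms train far richer models, but the core idea of learning a pattern from historical data and using it to predict a future value can be shown with the simplest model, an ordinary least-squares straight line. The monthly sales figures and the `fit_linear_trend` helper below are made up for illustration.

```python
def fit_linear_trend(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical history: units sold in months 1 to 6.
months = [1, 2, 3, 4, 5, 6]
units_sold = [100, 110, 120, 130, 140, 150]

slope, intercept = fit_linear_trend(months, units_sold)
forecast_month_7 = slope * 7 + intercept
print(forecast_month_7)  # 160.0 for this perfectly linear series
```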

4. Key Components of Big Data Architecture

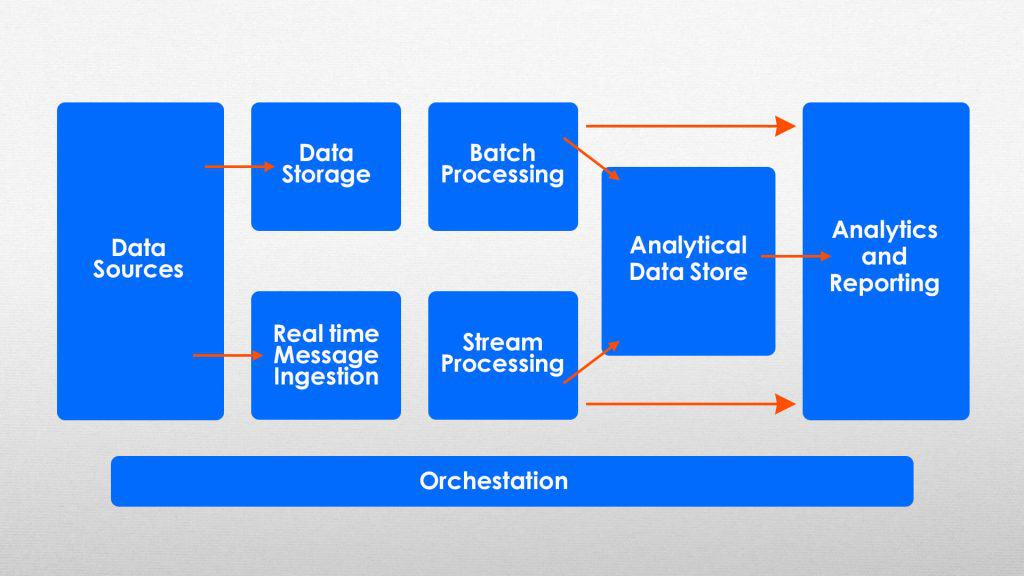

Any big data solution is underpinned by its architecture. This architecture is a blueprint for how data will be collected, stored, processed, and analyzed. Some of the key components of big data architecture include:

Data Sources: This is where the data originates. It could be website logs, databases, online transaction system exports, social media streams, or IoT device feeds.

Data Storage: Once collected, data needs to be stored. Modern big data solutions can use distributed storage systems like Hadoop’s HDFS or cloud storage solutions like Amazon S3 and Azure Blob storage.

Data Management: This involves cleaning, transforming, and processing the data so it is ready for analysis. Database management systems used for this include Oracle, Ingres, and Informix.

Data Analysis and Reporting: Once processed, the data is analyzed to extract insights. This could involve everything from simple reporting and business intelligence gathering to advanced machine learning.

5. Where and Why Big Data Solutions are Used

Every industry has recognized the potential of harnessing vast amounts of data to drive innovation, efficiency, and growth. Let’s explore some applications of big data solutions across various sectors:

E-commerce

The e-commerce industry thrives on understanding customer preferences and behavior. Big data plays a pivotal role in this:

Customer Behavior Analysis: By analyzing browsing habits, purchase history, and feedback, businesses can gain a deeper understanding of their customers, allowing for more targeted marketing strategies.

Recommendation Systems: Ever wondered how platforms like Amazon seem to know exactly what you’re interested in? Big data algorithms analyze user behavior and preferences to suggest products, enhancing the shopping experience.

Inventory Management: Predictive analytics can forecast demand for specific products, helping businesses manage their inventory more efficiently and reduce overhead costs.
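The recommendation idea can be sketched with simple item co-occurrence counting ("customers who bought X also bought Y"). This is an illustrative heuristic, not Amazon's actual algorithm; the orders and the helper names are hypothetical.

```python
from collections import Counter
from itertools import combinations

def co_purchase_counts(orders):
    """Count how often each pair of distinct products appears in the same order."""
    pair_counts = Counter()
    for order in orders:
        # sorted(set(...)) removes duplicates and gives each pair one canonical order
        for a, b in combinations(sorted(set(order)), 2):
            pair_counts[(a, b)] += 1
    return pair_counts

def recommend(product, pair_counts, top_n=3):
    """Return the products most often bought together with `product`."""
    scores = Counter()
    for (a, b), count in pair_counts.items():
        if a == product:
            scores[b] += count
        elif b == product:
            scores[a] += count
    return [item for item, _ in scores.most_common(top_n)]

# Hypothetical order history.
orders = [
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "stove"],
    ["lantern", "batteries"],
]
pairs = co_purchase_counts(orders)
print(recommend("tent", pairs, top_n=1))  # ['sleeping bag']
```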

Healthcare

The healthcare sector is undergoing a revolution, with big data at its core:

Predictive Analytics: By analyzing health trends and data, it’s possible to predict potential outbreaks or health crises, allowing for proactive preparedness and faster response.

Patient Data Analysis: Personalized medicine is on the rise. By analyzing individual patient data, healthcare providers can offer treatments better tailored to individual needs.

Research: Big data aids in medical research by providing insights into patterns and correlations that can lead to breakthroughs in treatments and drug development.

Finance

The financial sector is highly data-driven, making big data solutions indispensable:

Fraud Detection: By analyzing transaction patterns, machine learning algorithms can detect unusual behavior, helping in the early detection and prevention of fraud.

Risk Management: Predictive models can assess the risk levels of investments, guiding investors and institutions in their decision-making processes.

Algorithmic Trading: High-frequency trading strategies rely on real-time data analysis to make split-second investment decisions.
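As a simplified stand-in for the machine learning models mentioned above, the sketch below flags transactions whose amount is far from a customer's usual spending, measured in standard deviations (a z-score). Real fraud systems combine many more signals; the threshold of 3.0, the sample amounts, and the `flag_unusual` helper are assumptions for illustration.

```python
from statistics import mean, stdev

def flag_unusual(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least two values
    return [amt for amt in new_amounts if sigma > 0 and abs(amt - mu) / sigma > threshold]

# Hypothetical past transaction amounts for one customer.
past = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1]
print(flag_unusual(past, [46.0, 980.0, 41.5]))  # [980.0]
```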

Manufacturing

The manufacturing sector is leveraging big data to optimize operations and enhance product quality:

Predictive Maintenance: By analyzing sensor data, potential breakdowns can be predicted, allowing for timely maintenance and reducing downtime. High-value systems such as gas turbine engines often employ real-time digital twins to visualize complex systems.

Supply Chain Optimization: Big data can provide insights into demand patterns, helping manufacturers optimize their supply chains for efficiency and cost-effectiveness.

Quality Assurance: Real-time data analysis can detect anomalies in the manufacturing processes, ensuring consistent product quality.

Entertainment

The entertainment industry, especially streaming platforms, relies heavily on big data to get feedback and enhance user experiences:

- Content Recommendations: Platforms like Netflix and Spotify analyze user preferences, viewing habits, and feedback to suggest content, ensuring users always have something they’d love to watch or listen to.

- Viewer Preferences: By understanding what content resonates with their audience, producers can make informed decisions about future projects, ensuring higher viewer engagement and satisfaction.

Transportation and Logistics

The transportation sector is optimizing routes, reducing costs, and enhancing efficiency using big data:

- Route Optimization: By analyzing traffic patterns, weather conditions, and other factors, logistics companies can determine the most efficient routes, saving time and fuel.

- Demand Forecasting: Public transport services can adjust their schedules based on predicted demand, ensuring optimal service levels.
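Route optimization is at heart a shortest-path problem. The sketch below uses Dijkstra's algorithm on a small, hypothetical road network whose edge weights are travel times in minutes; in practice those weights would be refreshed from traffic and weather data, and commercial routing engines use considerably more elaborate methods. The `fastest_route` name is illustrative.

```python
import heapq

def fastest_route(graph, start, goal):
    """Dijkstra's algorithm over travel times. Returns (minutes, path), or (inf, []) if unreachable."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, travel_time in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (minutes + travel_time, neighbor, path + [neighbor]))
    return float("inf"), []

# Hypothetical road network: travel times in minutes between locations.
roads = {
    "depot": {"A": 10, "B": 4},
    "B": {"A": 3, "C": 12},
    "A": {"C": 5},
    "C": {},
}
print(fastest_route(roads, "depot", "C"))  # (12, ['depot', 'B', 'A', 'C'])
```

The direct-looking routes (depot to A to C, or depot to B to C) take 15 and 16 minutes, so the detour through both B and A wins.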

The adoption of big data solutions across industries is driven by the desire to be more informed, efficient, and responsive. The ability to analyze vast datasets offers a competitive edge, enabling businesses to anticipate challenges, seize opportunities, and earn high customer satisfaction scores.

6. Getting Started with Big Data

Businesses of all sizes can benefit from big data solutions, but they need a strategy, a plan, and the right resources to get going. Below are some pointers on getting started:

- Assess Your Needs: Before investing in any big data solution, understand your specific requirements. Are you looking to improve customer analytics, optimize business processes, or explore new revenue streams?

- Start Small: You don’t need to overhaul your entire system immediately. Begin with a pilot project, gauge its success, learn some lessons, and then expand.

- Skill Development: Big data requires a unique set of skills. Invest in training programs for your team. Familiarize them with tools like Hadoop, Spark, and data visualization platforms. Hadoop skills are particularly hard to find. Managed MPP data warehouses from the likes of Actian and Snowflake provide the scalability of Hadoop without the complexity.

- Data Governance: Ensure you have policies in place for data quality, data access, and data sharing. This will ensure consistency and reliability in your data.

Training and Certification Opportunities

Several institutions and platforms offer courses and certifications in big data:

- Coursera & Udemy: These platforms have courses on big data technologies, data science, and analytics.

- Certified Big Data Professional: Offered by the Data Science Council of America, this certification covers big data tools and practices.

- Hortonworks Certified Data Scientist: A hands-on certification for those proficient in the Hortonworks Data Platform.

Best Practice Guide for a Successful Big Data Implementation

- Data Integration: Ensure seamless integration between data sources and consuming applications across departments and lines of business, avoiding silos. Consider a data integration solution that replaces multiple high-maintenance point-to-point connections with an integration bus architecture.

- Security: With the increasing volume of data, especially sensitive data, security is paramount. Implement robust encryption of data at rest and in motion. Adopt role-based access controls.

- Scalability: Your chosen big data solution should be scalable to accommodate growing data volumes. Cloud-based solutions scale transparently with little reliance on Hadoop skills.

- Collaboration: Encourage collaboration between data scientists, business analysts, and IT teams for holistic project execution. Identify data experts across the business, as they can be developed into data stewards and data publishers.
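The case for an integration bus over point-to-point connections is simple arithmetic. Assuming, as a worst case, that every system must exchange data with every other, n systems need n(n-1)/2 point-to-point links, while a bus needs only one connection per system. The function names below are illustrative.

```python
def point_to_point_links(n_systems):
    """Full mesh: every pair of systems needs its own connection, n(n-1)/2 in total."""
    return n_systems * (n_systems - 1) // 2

def bus_links(n_systems):
    """Integration bus: each system connects once, to the shared bus."""
    return n_systems

for n in (5, 10, 20):
    print(n, point_to_point_links(n), bus_links(n))
# 5 10 5
# 10 45 10
# 20 190 20
```

Point-to-point links grow quadratically while bus connections grow linearly, which is why maintenance effort explodes as departments and lines of business add systems.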

7. Top 10 Big Data Solutions: Features and Pricing

There are many options out there, so it’s important to understand the features and pricing of the top solutions to make an informed decision. Here’s a deeper dive into the top 10 big data solutions available today:

1. Actian Cloud Data Platform

Actian is a leader in the analytics domain and offers a comprehensive data platform tailored for data-driven businesses that aim to derive actionable insights from their data assets. Recognized for its scalability and performance, Actian has been at the forefront of data warehousing and analytics solutions. Its platform is designed to handle complex queries for real-time decision-making.

Features:

- Vector Database: Columnar data storage with parallel query processing.

- Hybrid Deployment: Flexibility to be deployed on-prem and multi-cloud.

- Data Integration: Built-in data connectors and data pipeline orchestration.

- Scalability: In-server vertical scalability and across clustered servers.

Pricing: Customized based on deployment and features.
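Columnar storage, listed among the features above, is easiest to see by comparing layouts. The sketch below pivots row-oriented records into one list per column; Python lists only model the layout, not disk I/O, and the sales records and `to_columns` helper are hypothetical. On disk, a row store must read whole records to reach the `amount` field, while a column store can scan just the `amount` column, which is why analytic aggregates favor columnar engines.

```python
# Hypothetical sales records in a row-oriented layout: one record per entry.
rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0},
    {"order_id": 2, "region": "US", "amount": 75.5},
    {"order_id": 3, "region": "EU", "amount": 210.0},
]

def to_columns(records):
    """Pivot row-oriented records into one list per column (column-oriented layout)."""
    columns = {name: [] for name in records[0]}
    for record in records:
        for name, value in record.items():
            columns[name].append(value)
    return columns

columns = to_columns(rows)

# SUM(amount): the row layout visits every record; the column layout
# works on the contiguous 'amount' values alone.
total_from_rows = sum(record["amount"] for record in rows)
total_from_columns = sum(columns["amount"])
print(total_from_rows, total_from_columns)  # 405.5 405.5
```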

2. Hadoop

Developed by the Apache Software Foundation, Hadoop stands as a pillar in the big data realm. Originating from Google’s foundational papers on MapReduce and the Google File System, Hadoop is tailored to process extensive datasets using clusters of servers. Its architecture, which emphasizes low cost, speed, and resilience, has made it a well-established choice for organizations venturing into big data analytics.

Features:

- HDFS: Efficiently stores vast data volumes.

- MapReduce: Enables parallel data processing across cluster nodes.

- Fault Tolerance: Ensures data protection and continuous processing.

- Scalability: Easily expandable with more nodes.

- Ecosystem: Includes projects like Hive and YARN.

Pricing: While core Hadoop is free, commercial versions like Cloudera offer added features, with variable pricing.
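The MapReduce model that Hadoop implements can be sketched in a few lines of Python using the classic word-count example. Everything runs in one process here; in Hadoop, mappers run in parallel on the nodes holding the HDFS blocks, and the framework performs the shuffle across the network. This is not Hadoop's Java API, and the function names are illustrative.

```python
from collections import defaultdict
from itertools import chain

def map_phase(document):
    """Mapper: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle_phase(mapped_pairs):
    """Shuffle: group every value emitted for the same key."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reducer: combine the grouped values for each key."""
    return {key: sum(values) for key, values in groups.items()}

# Hypothetical input splits, standing in for blocks of a file in HDFS.
splits = ["big data big insights", "Data lakes hold big data"]
mapped = chain.from_iterable(map_phase(split) for split in splits)
word_counts = reduce_phase(shuffle_phase(mapped))
print(word_counts)  # {'big': 3, 'data': 3, 'insights': 1, 'lakes': 1, 'hold': 1}
```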

3. Spark

Emerging from the AMPLab at the University of California, Berkeley, Apache Spark has rapidly ascended the ranks to become synonymous with big data processing. Its versatile APIs, which can work with more than 50 data formats, have made it a favorite among data scientists and engineers.

Features:

- In-memory Processing: Keeps working data in memory to accelerate tasks.

- Libraries: Includes MLlib, Spark Streaming, and GraphX.

- DataFrame API: Simplifies data manipulation.

- Compatibility: Works with Hadoop’s YARN and HDFS.

Pricing: Spark is open-source. Vendors like Databricks offer managed services with variable pricing.

4. Google Cloud BigQuery

Originating from the tech giant Google, BigQuery stands as a testament to the company’s commitment to democratizing big data analytics. As a fully managed, serverless data warehouse, BigQuery allows businesses to run SQL queries against multi-terabyte datasets in mere seconds. Leveraging Google’s vast infrastructure, it offers a hassle-free approach to data analytics that eliminates the need for database administration. BigQuery is great for queries that scan large datasets but lacks some of the flexibility that other cloud data warehouses offer for smaller, day-to-day queries.

Features:

- Real-time Analytics: Immediate insights.

- Automatic Backup: Ensures data safety.

- Integration: Works with various Google Cloud services.

- Built-in Machine Learning: Simplifies predictive analytics.

Pricing: Pay-as-you-go model based on data processed. Storage costs are separate.

5. Azure Data Lake

Azure Data Lake, from Microsoft, is a core component in the company’s cloud computing arsenal. Designed to provide a scalable and secure data storage solution, it caters to businesses that require advanced analytics capabilities. With its ability to handle massive amounts of data and provide parallel processing, Azure Data Lake is tailored for enterprises that aim to harness the power of their data in real time.

Features:

- Parallel Processing: For large-scale data analytics.

- Integration: Works with other Azure services.

- U-SQL Scripting: Combines SQL and C#.

- Scalable Storage: Uses Azure Data Lake Storage Gen2.

Pricing: Charges based on data storage and analytics units consumed.

6. Oracle Big Data

Oracle, a name synonymous with enterprise database solutions, has its own comprehensive offering: Oracle Big Data. Designed to be a cohesive solution, it integrates seamlessly with Oracle’s vast suite of products. This integration ensures that businesses can leverage the power of big data without disrupting their existing Oracle-based workflows. With a focus on scalability, security, and performance, Oracle’s big data solution is tailored for enterprises that prioritize data-driven decision-making.

Features:

- Advanced Analytics: Includes predictive and spatial processing.

- Oracle Big Data SQL: Unified querying across multiple sources.

- Cloud Support: Flexible deployment options.

- Security: Emphasizes data protection.

Pricing: Customized based on deployment and features.

7. Amazon Redshift

Amazon Redshift, part of Amazon Web Services (AWS), is a fully managed data warehouse service. Redshift provides a platform that allows users to run complex queries and get results in seconds. Leveraging the vast infrastructure of AWS, Redshift is optimized for online analytical processing (OLAP), making it a go-to solution for businesses that need to analyze large datasets with lightning speed. Because it supports standard SQL and works with popular BI tools, transitioning to Redshift is smooth for businesses familiar with traditional relational databases.

Features:

- Petabyte-scale Storage: For vast datasets.

- Columnar Storage: Enhances performance.

- Automated Backups: Ensures data safety.

- Fast Performance: Uses parallel processing.

Pricing: Pay-as-you-go, with on-demand or reserved instance options.

8. Vertica Analytics Platform

Vertica Analytics Platform, developed by Vertica Systems (a division of Open Text), stands out as a high-performance analytics database designed for modern data-driven enterprises. Built from the ground up to handle today’s demanding big data workloads, Vertica offers a solution that combines speed, scalability, and simplicity. Its columnar storage architecture and parallel processing capabilities ensure that businesses can analyze their data in real time, making it a preferred choice for organizations that prioritize data-driven decision-making.

Features:

- In-database Machine Learning: Direct model creation.

- Columnar Storage: Improves query performance.

- Eon Mode: Separates compute and storage.

- Hybrid Deployment: On-premises, cloud, or on Hadoop.

Pricing: Based on storage capacity, with enterprise and community editions.

9. SAS Big Data Analytics

SAS, a name synonymous with advanced analytics, has been empowering businesses with data-driven insights for decades. With the rise of big data, SAS has evolved its offerings to cater to the unique challenges posed by vast datasets. SAS Big Data Analytics is a testament to this evolution, combining the power of SAS’s analytical prowess with the demands of modern data landscapes.

Features:

- Advanced Data Visualization: Interactive dashboards.

- Machine Learning: Supports various algorithms.

- Predictive Analytics: Forecasts trends.

- Integration with Hadoop: Direct analytics on Hadoop clusters.

Pricing: Varies based on modules and deployment.

10. Splunk

Splunk stands out as a unique platform designed to harness the power of machine data, which is often voluminous and complex. This data, generated by devices, servers, networks, and applications, holds a wealth of insights, and Splunk is engineered to extract them. By converting machine data into actionable intelligence, Splunk empowers businesses to make informed decisions, optimize operations, and enhance security postures.

Features:

- Data Aggregation: Makes data searchable.

- Real-Time Monitoring: Offers instant alerts.

- Advanced Search: Detailed data exploration.

- Machine Learning Toolkit: Supports predictive analytics.

Pricing: Based on daily data ingestion, with various tiers available.

8. Real-world Applications and Use Cases

Big data solutions are about deriving real-world value from data. Here are some compelling use cases:

- Customer Insights: By analyzing customer data, businesses can understand purchasing habits, preferences, and pain points, allowing them to tailor their products, services, and marketing strategies.

- Supply Chain Optimization: Big data analytics can predict demand, optimize inventory levels, and reduce waste.

- Predictive Maintenance: By analyzing machine logs and sensor data, companies can predict when equipment will fail and perform maintenance proactively.

- Fraud Detection: Financial institutions use big data to detect unusual transactions that could indicate fraud.

- Personalized Marketing: Companies can create better-targeted marketing campaigns based on individual customer preferences and behaviors.

- Traffic Optimization: City planners can analyze traffic data to optimize traffic light timings, reduce congestion, and plan infrastructure.

- Energy Consumption Analysis: Utility companies can optimize energy distribution and forecast demand using big data.

- Research and Development: Pharmaceutical companies can analyze clinical trial data to speed up drug discovery.

- Real-time Analytics: By analyzing data in real time, businesses can make decisions on the fly, whether in stock trading or e-commerce inventory management.

- Natural Language Processing: Used in chatbots and voice assistants to understand and process human language.

9. Challenges and Solutions in Big Data

Big data initiatives are not without hurdles. As businesses increasingly lean on data-driven insights for informed decisions, understanding and navigating these challenges becomes ever more important. Below are some major obstacles in big data, along with strategies to surmount them:

Complexity

The multifaceted nature of big data, combined with the rapid technological evolution, makes it a challenging domain. Rising demand has made data scientists and big data experts highly sought after. To mitigate this, businesses can invest in training programs to upskill their current workforce and create citizen data analysts. Cloud platforms reduce the reliance on internal IT teams for deployment, management, and administration. Additionally, embracing big data platforms with user-friendly interfaces and automation features can simplify processes and reduce the need for specialized skills.

Security

In today’s digital era, data breaches and cyber threats are a constant concern. Protecting vast volumes of data, especially sensitive and personal information, is vital. Robust security measures, such as data encryption, multi-factor authentication, and strict access controls, are essential. Regular security audits and staying current with the latest security threats can further increase data protection.

Performance

With data volumes skyrocketing, ensuring swift data processing and real-time analytics is crucial. Handling enormous data sets without compromising on speed is a significant challenge. However, by adopting techniques like parallel processing and scalable, on-demand cloud platforms, high performance can be maintained. Solutions like Actian, Snowflake, and Redshift are known for fast, massively parallel query processing, so they can be key choices for scalable data analytics.
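Parallel processing usually follows a partition, compute, merge pattern: split the data, have each worker produce a partial result, then combine the partials. The sketch below shows that pattern with Python's `concurrent.futures.ThreadPoolExecutor`. Because of CPython's global interpreter lock, threads here illustrate the structure rather than deliver a CPU speedup; an MPP engine would run the partitions on separate cores or nodes. The helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(values, n_parts):
    """Split data into at most n_parts roughly equal slices, as an MPP engine spreads rows across nodes."""
    size = -(-len(values) // n_parts)  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]

def partial_aggregate(part):
    """Each worker computes a partial (sum, count) over its own slice only."""
    return sum(part), len(part)

def parallel_average(values, n_workers=4):
    if not values:
        raise ValueError("cannot average an empty dataset")
    parts = partition(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_aggregate, parts))
    # Merge step: combine partial sums and counts into the final answer.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count

print(parallel_average(list(range(1, 101))))  # 50.5
```

Workers return (sum, count) pairs rather than averages because averaging the partition averages gives the wrong answer when partitions differ in size.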

Integration

Data today comes from an ever-broader set of sources, from IoT devices to social media streams, resulting in a mix of structured and unstructured data. Merging this varied data into a unified system is no small feat. Data integration tools and middleware solutions can be invaluable in this context. Data lakes, which store data in its native format, can also aid in managing diverse data types. Adopting data stewardship and standardization practices ensures consistency and keeps data lakes from degrading into data swamps.

Data Quality

The quality and accuracy of data are crucial for confident decision-making. Often, businesses grapple with noisy, incomplete, or irrelevant data. Implementing rigorous data validation checks and employing data cleansing tools can help maintain high data standards. Sourcing data from reliable and trusted sources further ensures its quality and relevance.
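Validation checks of this kind can be as simple as rules applied to every incoming record, with failing records routed to a reject list for review rather than silently dropped. The fields and rules below (a required `customer_id`, an email containing `@`, an age between 0 and 120) and the helper names are hypothetical examples.

```python
def validate(record):
    """Return a list of data-quality problems found in one customer record."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    email = record.get("email") or ""
    if "@" not in email:
        problems.append("invalid email")
    age = record.get("age")
    if age is not None and not (0 <= age <= 120):
        problems.append("age out of range")
    return problems

def split_clean_and_rejected(records):
    """Separate records that pass every rule from those that fail, keeping the reasons."""
    clean, rejected = [], []
    for record in records:
        issues = validate(record)
        if issues:
            rejected.append((record, issues))
        else:
            clean.append(record)
    return clean, rejected

# Hypothetical incoming batch.
batch = [
    {"customer_id": "C1", "email": "ana@example.com", "age": 34},
    {"customer_id": "", "email": "bob@example.com", "age": 41},
    {"customer_id": "C3", "email": "not-an-email", "age": 230},
]
clean, rejected = split_clean_and_rejected(batch)
for record, issues in rejected:
    print(record.get("customer_id") or "<blank>", issues)
```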

In essence, while big data brings its own set of challenges, they aren’t insurmountable. With strategic solutions in place, businesses can fully harness the potential stored in their data assets.

10. Future Trends and Innovations in Big Data

The big data landscape is ever-evolving, driven by the accelerating pace of technological advancements and the insatiable appetite for deeper insights. As we look ahead, several trends and innovations stand out:

AI and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are no longer just buzzwords; they are pivotal forces in the big data arena. Their integration into big data platforms is revolutionizing data analytics:

- Predictive Analysis: ML algorithms can sift through vast amounts of data to predict future trends, with or without human supervision.

- Automation: Routine data processing tasks, data cleansing, and even some complex data operations are being automated, reducing human intervention and errors.

- Personalization: From e-commerce product recommendations to personalized content on streaming platforms, AI-driven insights are enhancing user experiences like never before.

Real-time Data Processing

Demand for real-time insights is high. Whether it’s stock traders needing split-second updates or e-commerce platforms adjusting prices dynamically, the value of real-time data is undeniable:

- Instant Decision Making: For sectors like finance, healthcare, and emergency services, real-time data can be the difference between profit and loss, health and ailment, or even life and death.

- Enhanced Customer Experience: Businesses can engage customers better by understanding their real-time behavior, leading to more effective marketing strategies and improved customer satisfaction.

Quantum Computing

- Cryptography: Quantum computing poses both a threat and an opportunity in the realm of cryptography. While it could potentially break many of today’s public-key encryption methods, it also paves the way for quantum encryption techniques, heralding a new era of secure communication.

Edge Computing

With the proliferation of IoT devices, processing data at the source, or “edge,” is becoming increasingly vital:

- Reduced Latency: By processing data on the device itself or a local server, edge computing offers faster response times, crucial for applications like autonomous vehicles or industrial robots.

- Bandwidth Efficiency: Transmitting vast amounts of raw data over networks can be bandwidth-intensive. Edge computing allows for initial processing at the source, reducing the data that needs to be transmitted.
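The bandwidth argument can be made concrete by comparing the size of a batch of raw readings with a summary computed at the edge. The sketch assumes a hypothetical sensor sampling ten times per second for one minute and sends the count, minimum, maximum, and mean instead of all 600 values; the `summarize_at_edge` helper is illustrative, and the real savings depend on what the upstream system needs.

```python
import json

def summarize_at_edge(readings):
    """Reduce a batch of raw readings to a compact summary before sending it upstream."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(sum(readings) / len(readings), 2),
    }

# Hypothetical: one minute of temperature readings sampled ten times per second.
raw = [round(20.0 + (i % 7) * 0.1, 1) for i in range(600)]
raw_payload = json.dumps(raw).encode("utf-8")
summary_payload = json.dumps(summarize_at_edge(raw)).encode("utf-8")
print(len(raw_payload), "bytes raw vs", len(summary_payload), "bytes summarized")
```

The trade-off is that the upstream system loses the raw detail, so a device might still forward the full readings when it detects something unusual.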

Augmented Reality (AR) and Virtual Reality (VR)

AR and VR are set to play a more significant role in data visualization:

- Immersive Data Interaction: Imagine walking through a 3D representation of your data, analyzing patterns, and making decisions. AR and VR can make this a reality, offering an entirely new way to interact with data.

- Training and Simulations: From training new employees to simulating complex scenarios, AR and VR can provide a rich, immersive experience, enhancing understanding and retention.

11. Conclusion

Digital transformation is increasing the opportunities to explore rich new insights. Big data solutions are pervasive. They empower businesses to make better-informed decisions, understand customer behavior, and stay ahead of the competition. By investing in the right tools, training, and strategies, businesses can unlock the true potential of big data.